The Rise of Multimodal AI: How Models That See, Hear, and Read Are Changing Everything

I still remember the first time I uploaded an image to ChatGPT and asked it to explain what it was looking at. The response wasn’t just accurate—it was insightful. The AI didn’t just list objects; it understood context, relationships, even the mood of the scene. That moment felt like crossing a threshold into something fundamentally new.

Thank you for reading this post, don't forget to subscribe!

We’ve come a long way from the early days of chatbots that could only process text. Today’s AI doesn’t just read your words—it sees your photos, hears your voice, watches your videos, and somehow makes sense of all of it at once. This isn’t borrowed from a sci-fi movie. It’s happening right now, in tools millions of people use every day. And it has a name: multimodal AI.

If you’ve ever asked Google Lens what kind of plant you’re looking at, had a voice conversation with your phone’s assistant, or used an app that generates images from your descriptions, you’ve already stepped into this world. But here’s the thing most people don’t realize: we’re still in the early chapters of this story. What comes next could reshape how we work, learn, create, and communicate in ways we’re only beginning to imagine.

What Makes Multimodal AI Different?

Let’s get one thing straight: traditional AI was basically a specialist. You had language models that could write brilliantly but couldn’t tell a cat from a couch in a photo. You had image recognition systems that could identify thousands of objects but couldn’t explain why any of it mattered. Each model was locked in its own sensory bubble.

Multimodal AI breaks down those walls entirely. These systems can process text, images, audio, video, and other data types simultaneously—not just as separate inputs, but as interconnected pieces of a larger puzzle. Imagine showing an AI a photo of your grandmother’s handwritten recipe, asking it to read the ingredients, identify what dish it is, suggest modern substitutions, and then walk you through the cooking process with step-by-step voice instructions. That’s multimodal AI in action.

The breakthrough isn’t just technical—it’s almost philosophical. For AI to truly understand our world, it needs to experience information the way we do: through multiple senses working together. When you’re at a concert, you’re not just hearing music or seeing performers. You’re experiencing both simultaneously, plus the crowd’s energy, the lighting, the atmosphere. Multimodal AI tries to capture that kind of holistic understanding.

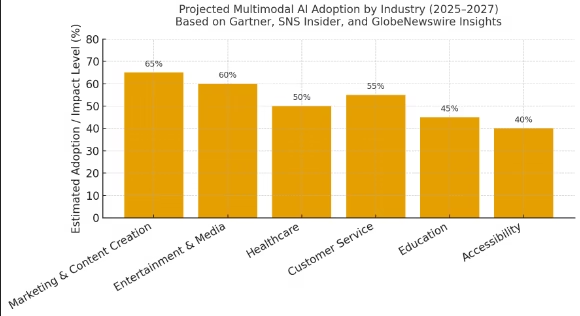

According to research from Gartner, about 40% of generative AI solutions will be multimodal by 2027. That’s not a gradual shift—it’s a seismic change happening right before our eyes. The question isn’t whether this technology will become mainstream, but how quickly.

Related topic: The Rise of Multimodal AI

The Big Players Reshaping the Game

The race to build the best multimodal AI has become one of tech’s most intense competitions. Three major players have emerged as frontrunners, each taking a slightly different approach. Let me walk you through what makes each one special.

OpenAI’s GPT-4o: The All-Rounder That Moves Fast

When OpenAI launched GPT-4o in May 2024 (yes, that “o” stands for “omni”), they weren’t just upgrading their existing model—they were reimagining what AI could do. This thing processes text, images, audio, and video in real-time, and honestly? The speed is startling. It’s twice as fast as GPT-4 Turbo, which means you can have actual back-and-forth conversations without those awkward pauses.

But speed is only part of the story. GPT-4o can engage in voice conversations that feel surprisingly natural. You can interrupt it mid-sentence, and it adjusts. It picks up on tone and emotion in your voice. One user described talking to it as feeling “less like prompting a machine and more like explaining something to a colleague who’s having a really good day.”

The visual analysis is sharp too. Show it a complex chart, a meme you don’t understand, or a photo of your car’s weird dashboard warning light, and it’ll break down what it’s seeing with the kind of detail that makes you think, “Wait, how did it know that?”

Google’s Gemini 1.5 Pro: The Model with a Photographic Memory

Google took a different bet with Gemini 1.5 Pro, and honestly, it paid off. Instead of just focusing on speed, they went for scale—specifically, the scale of information the model can handle at once. We’re talking about a context window of up to 2 million tokens. In human terms? That’s roughly 1.4 million words, or about 40 hours of audio, or hundreds of documents.

Why does this matter? Imagine you’re a researcher analyzing a two-hour documentary, comparing it against dozens of academic papers, historical footage, and interview transcripts. With most AI systems, you’d need to break this into chunks and process it bit by bit, losing the big picture. Gemini can swallow the whole thing and still understand how minute 17 connects to minute 97.

The video understanding capabilities are particularly impressive. Gemini doesn’t just analyze individual frames—it tracks narratives, follows objects across scenes, and understands temporal relationships. One educator told me they used it to analyze an entire semester’s worth of lecture recordings, pulling out key concepts and creating study guides that actually captured the flow of the course.

Anthropic’s Claude 3.5: The Thoughtful Analyst

Claude 3.5 Sonnet (that’s the latest version from Anthropic, released mid-2024) carved out its niche by being the careful, methodical thinker of the bunch. While it doesn’t process audio natively like GPT-4o, it absolutely excels at visual analysis that requires precision and nuance.

Medical professionals have particularly gravitated toward Claude. Why? Because in fields where mistakes have consequences, you want an AI that thinks before it speaks. When analyzing an X-ray or financial chart, Claude tends to acknowledge uncertainty rather than confidently stating something wrong. It’s the difference between “This appears to show…” and “This definitely is…”

One radiologist I spoke with described it as “the attending physician who takes an extra moment to consider before making the call.” In healthcare, that extra moment matters.

The Wider Landscape

Beyond the big three, the multimodal AI ecosystem is exploding with innovation. Meta’s ImageBind project is experimenting with binding six different types of sensory data together—not just sight and sound, but thermal imaging, depth perception, and even motion data. Microsoft has been steadily integrating multimodal capabilities across Office, making it possible to describe the chart you want in plain English and have it generated automatically.

The multimodal AI market hit $740 million in 2024, driven largely by content creators, marketers, and businesses hungry for tools that can handle their increasingly complex workflows. And frankly, that number feels conservative given how quickly adoption is accelerating.

The Technical Magic That Makes It Work

Okay, I know “technical” might make your eyes glaze over, but stay with me. Understanding how multimodal AI actually works helps explain why it’s so powerful—and why it sometimes fails in interesting ways.

One Language to Rule Them All

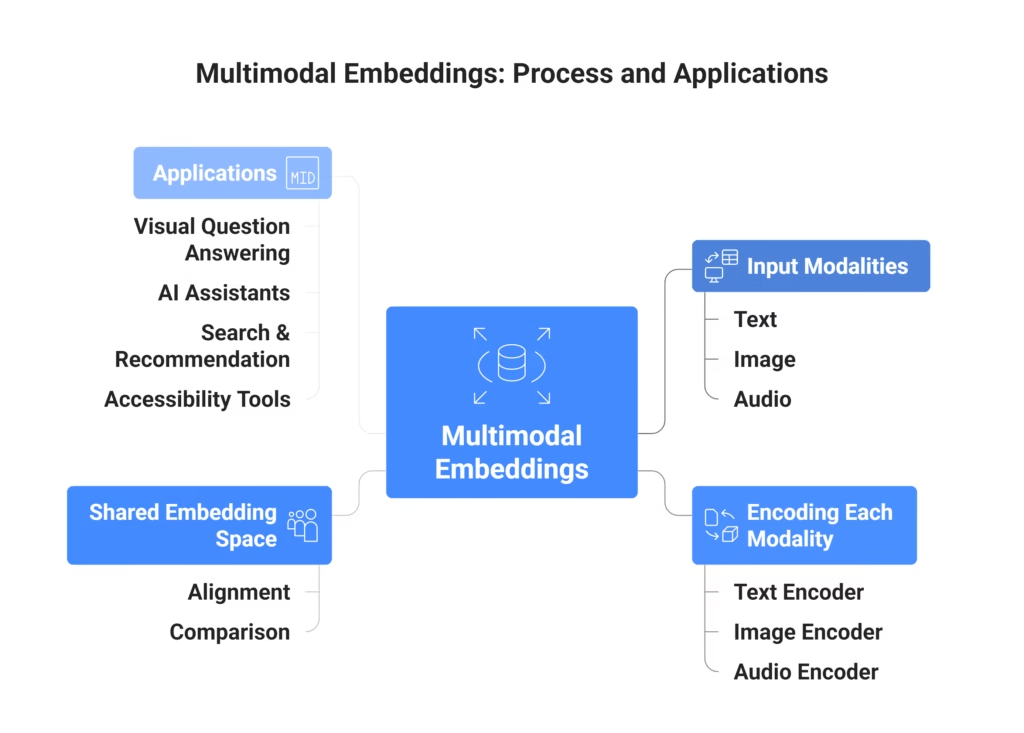

Here’s the core insight: modern multimodal AI doesn’t treat different data types as fundamentally separate things. Instead, it converts everything—images, text, audio, video—into the same mathematical language called embeddings.

Think of embeddings as a universal translator. Whether you feed the system a photo of a golden retriever, the word “dog,” or the sound of barking, the AI maps all of these into points in the same mathematical space. Related concepts end up close together in this space, even if they started as completely different data types.

This is why multimodal AI can do things that seem almost magical. When you ask it to “find images that match this song’s vibe,” it’s not randomly guessing. It’s comparing the mathematical representations of the music and images in that shared space, finding genuine connections.

Attention: The Secret Sauce

The other breakthrough is something called cross-modal attention mechanisms. This is what allows the AI to figure out which parts of different inputs are relevant to each other.

Imagine watching a cooking video while reading the recipe. Your brain automatically links the visual action of dicing onions with the text instruction that says “dice the onion.” Multimodal AI does something similar through attention mechanisms—computational processes that help the model focus on relevant connections between different data streams.

When analyzing a complex scene, the attention mechanism helps the AI understand that the text on a street sign relates to the building behind it, or that the urgency in someone’s voice connects to the concerning images they’re showing.

Training at Scale

These models learn by processing absolutely massive datasets—billions of examples of different data types appearing together. Images paired with captions. Videos paired with transcripts. Audio paired with descriptions. The model learns to predict masked portions of one modality based on clues from others, gradually building an understanding of how different information types relate.

The computational cost is mind-boggling. Training GPT-4 reportedly cost over $100 million in computing resources alone. But this enormous investment is what enables these systems to handle the complexity of real-world information in all its messy, multimedia glory.

Where This Technology Is Already Changing Lives

Theory is interesting. Real-world impact is what matters. Let me show you where multimodal AI is already making a tangible difference in people’s lives and industries.

Healthcare: Saving Lives Through Better Decisions

Healthcare might be the most consequential application of multimodal AI—and I mean that literally, as in lives are being saved.

Modern medicine generates an overwhelming volume of diverse data. A single patient might have X-rays, MRI scans, genetic test results, blood work, doctor’s notes, family history, and weeks of vital sign monitoring. Traditionally, piecing this together required multiple specialists and a lot of time. Multimodal AI can integrate it instantly.

Let me give you a concrete example. A patient comes in with vague symptoms—fatigue, persistent cough, some weight loss. In the past, a doctor might order tests sequentially, each one taking days and possibly missing connections. Now, a multimodal AI system can simultaneously analyze chest X-rays, compare them against the patient’s medical history, cross-reference with genetic markers, review previous doctor’s notes for context clues, and flag patterns that match rare conditions humans might miss.

The National Institutes of Health has invested heavily in multimodal AI research for exactly this reason. Their initiatives focus on integrating imaging data, genomic information, and clinical records to improve diagnostic accuracy and personalize treatments.

One oncologist told me about using multimodal AI for treatment planning. The system analyzed tumor images from CT scans, integrated genomic data about specific mutations, reviewed thousands of clinical trial results, and suggested treatment protocols tailored to that patient’s unique cancer profile—all while explaining its reasoning in language the medical team could evaluate.

The numbers back this up. According to the American Medical Association, physician adoption of AI tools jumped from 38% in 2023 to 66% in 2024. That’s not hype—that’s doctors seeing real value in their daily practice.

Education: Finally, Teaching That Adapts to Each Student

I’ve always felt that traditional online learning missed something crucial. It couldn’t adapt to how different students learn best. Some people are visual learners. Others need to hear explanations. Some need to work through problems hands-on. Most platforms just… served the same content to everyone.

Multimodal AI changes that equation fundamentally.

Modern educational AI can observe students through multiple channels simultaneously. It watches them work through problems via video. It analyzes their written explanations. It processes their verbal questions and even gauges their engagement through facial expressions and tone of voice. By integrating these signals, the system builds a nuanced understanding of each student’s learning style, challenges, and progress.

Here’s what this looks like in practice: A high school student is struggling with algebra. The AI notices (through video) that she keeps drawing pictures to solve problems, recognizes (through audio) frustration in her voice when reading equations, and sees (through text analysis) that her written work shows solid logical thinking but gets lost in notation.

The system then generates a customized lesson that emphasizes visual representations of algebraic concepts, uses minimal notation until concepts click, and includes lots of diagram-based practice problems. It’s not replacing the teacher—it’s giving teachers superhuman ability to personalize instruction for 30 different students simultaneously.

Language learning is another area where multimodal AI shines. Apps can now provide pronunciation feedback by analyzing your audio, explain grammar through text, demonstrate usage through images or video clips, and engage you in natural conversations that feel progressively more realistic. This multi-sensory approach mimics how we naturally acquire languages.

Accessibility: Technology That Actually Includes Everyone

For people with disabilities, multimodal AI isn’t just convenient—it’s life-changing in the most literal sense.

For people with visual impairments: Modern AI can analyze environments through smartphone cameras and provide detailed, real-time audio descriptions. Not just “there’s a door ahead” but “wooden door with brass handle, slightly ajar, approximately 10 feet ahead and 15 degrees to your right. There’s a rubber doorstop propping it open.”

The system can read handwritten notes, describe artwork in museums with the kind of detail that captures mood and meaning, help navigate complex spaces like airports, and even recognize faces so users know who’s approaching.

For people with hearing impairments: Real-time captioning has existed for years, but multimodal AI takes it further. The system identifies who’s speaking by analyzing video, notes emotional tone through facial expressions, adds context like [sound of applause] or [door slams], and even detects when someone says your name in a crowded room.

For people with motor impairments: Voice-activated multimodal assistants enable control of complex applications that previously required precise manual input. Instead of navigating through layers of menus, users can simply show the system what they want and describe their intent verbally.

For people with cognitive disabilities: Multimodal AI can transform complex text into simpler language, add visual explanations, provide audio narration, and break information into manageable chunks based on the user’s comprehension level. One parent told me about her teenage son with autism using a multimodal learning app that adapts in real-time to his processing speed and preferences, making homework finally feel manageable.

These aren’t theoretical benefits. They’re removing real barriers that have excluded people from full participation in education, work, and society.

Creative Industries: New Tools for Human Imagination

The creative world’s relationship with AI is complicated—equal parts excitement and anxiety. There are legitimate concerns about AI replacing human creativity, which deserve serious discussion. But there’s also no denying that these tools are enabling new forms of artistic expression.

Film and video: Tools like Runway Gen-2 and other video generation systems let independent creators produce scenes that would’ve required expensive equipment, large crews, and weeks of work. A solo filmmaker can now visualize a scene, generate it from a text description, refine it, and integrate it into their project—all from a laptop.

I spoke with a documentary filmmaker who used multimodal AI to recreate historical scenes for which no footage exists. She described the period through text, provided reference images of clothing and architecture, and the system generated remarkably accurate visual sequences that brought her narrative to life.

Music and soundscapes: Composers can describe the emotional tone they want (“triumphant but with an undercurrent of melancholy”), reference visual scenes the music should accompany, and generate multiple variations to find what resonates. Sound designers create audio effects by describing them, saving hours of recording and editing.

Design and marketing: Marketing teams use multimodal AI to generate complete campaigns—creating hero images, writing copy that matches the visual tone, producing video ads, and even suggesting color schemes based on psychological principles. The system analyzes successful campaigns, understands brand voice from existing content, and generates variations for A/B testing.

One creative director described it as “having a junior designer with infinite patience and no ego” who can rapidly prototype ideas, letting the human team focus on strategy and refinement rather than execution.

Interactive experiences: Game developers and writers are building adaptive narratives that respond to player choices with generated dialogue (both text and voice), appropriate visuals, and dynamic music that shifts based on the story’s emotional arc. The experience feels more alive because it’s not just following pre-scripted paths.

Business Operations: Efficiency Meets Intelligence

Across industries, businesses are deploying multimodal AI to solve practical problems and streamline operations in ways that directly impact their bottom line.

Customer support: Advanced support systems now analyze customer messages, process attached photos of damaged products, review account histories, and generate comprehensive responses that address issues holistically. Some handle video calls, analyzing both verbal complaints and visual demonstrations of problems.

One e-commerce company reported reducing resolution time by 60% after implementing multimodal support AI—not by replacing humans, but by giving human agents better tools to understand and solve problems quickly.

Quality control: Manufacturing plants use multimodal systems that combine visual inspection of products moving along assembly lines, audio analysis to detect abnormal machine sounds, thermal imaging to identify overheating components, and integration with production data to spot patterns indicating systemic issues.

Market research: Companies analyze consumer sentiment by simultaneously processing social media posts (text and images), video reviews, podcast discussions, and sales data. This multi-channel approach reveals insights that single-source analysis misses—like how product photography affects purchase decisions differently than written descriptions.

Retail innovation: Some clothing stores have deployed smart fitting rooms that use multimodal AI to analyze how clothes look on customers (through video), gather verbal feedback, suggest complementary items based on style preferences, and predict likely purchases based on browsing patterns.

One retail executive told me, “We’re not trying to replace sales associates. We’re giving them superpowers—the ability to remember every item in inventory, understand every customer’s preferences, and make perfect recommendations.”

The Problems We Need to Talk About

Look, I’m excited about multimodal AI. That’s probably obvious by now. But I’d be doing you a disservice if I didn’t address the serious challenges and risks this technology brings. These aren’t theoretical concerns—they’re happening right now, affecting real people.

Bias That Multiplies Across Modalities

Single-modality AI already struggles with bias. Language models reflect societal prejudices present in their training data. Image recognition systems perform worse on underrepresented groups. Multimodal AI potentially amplifies these problems by learning biased associations across different data types.

Here’s what worries me: If training data disproportionately shows certain professions associated with specific demographics across both images and text, the multimodal model might reinforce these stereotypes more powerfully than a text-only system. It’s learning these associations from multiple angles simultaneously, which can make the bias harder to detect and correct.

When these systems make decisions affecting people’s lives—hiring recommendations, loan approvals, medical diagnoses—biased outputs cause genuine harm. A resume analysis tool that’s influenced by multimodal training might unconsciously downweight candidates based on factors that have nothing to do with qualifications.

Solving this requires diverse training data, rigorous auditing, and transparency about limitations. Some organizations are developing multimodal fairness metrics, but honestly, we’re still early in understanding how to measure and mitigate these biases effectively.

Privacy in a World Where AI Sees Everything

Multimodal AI’s ability to process video, audio, and images raises privacy concerns that go way beyond what we faced with text-based systems. These models can identify individuals from photos, analyze emotions from facial expressions, recognize voices, and track behavior across multiple data sources.

The same capability that helps visually impaired people navigate could be used for mass surveillance without consent. The technology that enables better accessibility features could also enable invasive tracking and profiling.

I don’t have easy answers here. Clear legal frameworks, strong encryption, meaningful consent mechanisms, and transparency about data collection are essential. But technology consistently moves faster than policy, leaving important questions about appropriate use largely unanswered.

The Environmental Cost We Can’t Ignore

Training large multimodal models consumes staggering amounts of energy. We’re talking about millions of dollars in computing costs and associated carbon emissions that contribute meaningfully to climate change.

As these systems scale and become more widely deployed, their environmental footprint grows harder to ignore. Some companies are investing in renewable energy for data centers and developing more efficient architectures, but the fundamental tension between capability and sustainability remains unresolved.

Every time we use these tools, there’s an environmental cost. That doesn’t mean we shouldn’t use them—but we should be thoughtful about when their benefits justify their impact.

Deepfakes and the Battle Against Misinformation

Multimodal AI can create disturbingly realistic fake content. Videos of people saying things they never said. Fabricated images of events that never happened. Synthetic audio recordings that perfectly mimic someone’s voice.

This isn’t a theoretical future threat—it’s happening now. Political deepfakes. Insurance fraud with AI-generated accident scenes. Impersonation scams using voice cloning. Legal evidence that might be fabricated.

Detection tools are improving, but we’re in an arms race between generation and detection with no clear winner. The technology is also becoming more accessible, making these capabilities available to anyone with moderate technical skills.

When AI Misunderstands Context

As multimodal systems become more capable and autonomous, ensuring they behave according to human values becomes increasingly complex. These systems interpret multiple information sources simultaneously and make decisions based on that interpretation. When they misunderstand context across modalities, consequences can be serious.

Healthcare AI recommending treatments, autonomous vehicles interpreting traffic situations, content moderation systems evaluating context—all face scenarios where multimodal misinterpretation could cause harm.

The challenge is that understanding context requires nuance that even humans struggle with. Building systems that reliably grasp this complexity across modalities remains an ongoing challenge with no perfect solution in sight.

What’s Coming Next

The current state of multimodal AI is impressive, but honestly? We’re still in the early innings. Several clear trends are emerging that will shape where this technology goes next.

Robots That Actually Understand What They’re Doing

The next major frontier combines multimodal AI with robotics—systems that don’t just understand multiple types of information but can act in the physical world based on that understanding.

Imagine robots that watch humans perform tasks, read relevant instruction manuals, listen to verbal corrections, and then execute the task themselves by integrating all this information. Not following rigid pre-programmed routines, but actually understanding what they’re doing.

Companies like Figure AI and Tesla are building humanoid robots powered by multimodal AI. These could transform manufacturing, caregiving, hazardous work environments, and space exploration. The key innovation is tight integration between perception (seeing, hearing) and action (manipulation, navigation).

We’re probably 5-10 years from seeing these widely deployed, but the foundation is being built right now.

AI That Learns From You Over Time

Current multimodal models are mostly static after training—they don’t continuously learn from interactions with individual users. Future systems will likely incorporate ongoing learning, adapting to your communication style, preferences, and needs while respecting privacy.

Your personal AI assistant might learn that you’re a visual thinker who needs diagrams, prefers direct communication, and works best in the morning. Over weeks and months, it becomes increasingly effective at anticipating what you need and how to present it.

This raises interesting questions about personalization versus standardization. Should everyone interact with the same base model, or should AI systems develop distinct approaches tailored to individual users? There’s no obvious right answer.

AI That Actually Reasons Across Modalities

Today’s multimodal AI excels at pattern recognition and association but struggles with abstract reasoning. It can recognize that certain visual, auditory, and textual patterns often appear together, but it doesn’t deeply understand why.

Future systems will likely develop stronger causal reasoning capabilities—understanding not just correlations but actual cause-and-effect relationships between different modalities.

This could enable AI to truly grasp metaphors that span modalities, understand subtle cultural references combining visual and linguistic elements, or explain scientific concepts by meaningfully connecting equations, diagrams, and intuitive explanations rather than just associating them.

Better Governance and Ethical Frameworks

As multimodal AI becomes more powerful and widespread, we’ll need stronger governance frameworks. The European Union’s AI Act provides one model, with regulations based on risk levels. Future policies might specifically address multimodal capabilities—requiring transparency in how systems process different data types, mandating bias testing across modalities, and establishing clear liability frameworks for AI-caused harms.

Industry self-regulation will also evolve. Major AI companies have begun coordinating on safety research and sharing best practices. As these systems grow more capable, this coordination may strengthen, potentially including shared evaluation benchmarks and standardized safety testing.

Making Powerful AI Accessible to Everyone

While cutting-edge multimodal models currently require massive resources, smaller and more efficient models are emerging rapidly. Open-source alternatives are gaining capability, and techniques like model compression and efficient fine-tuning are making powerful multimodal AI accessible to smaller organizations and independent researchers.

This democratization has two sides. Positively, it enables innovation from diverse sources and prevents power from concentrating in a few large companies. Negatively, it makes potentially harmful capabilities more widely available.

Balancing these tensions will require thoughtful policy, technical solutions, and ongoing dialogue between developers, users, policymakers, and affected communities.

How to Prepare for This Transformation

If you’re wondering how to prepare for this shift—whether as an individual, professional, or organization—here are some practical strategies based on what I’ve seen work.

Stay curious but maintain healthy skepticism. Understand what multimodal AI can and can’t do. Neither dismiss it as overhyped nor assume it can solve every problem. The reality is nuanced, and critical thinking about appropriate use cases remains essential.

Experiment in low-stakes environments. If you’re part of an organization, start with pilot projects that have clear success metrics. Test multimodal applications in controlled settings before large-scale deployment. Learn from failures without betting the farm.

Build AI literacy across your team. Understanding how to effectively use these systems will become a valuable skill across professions. This doesn’t mean everyone needs to understand the mathematics—but knowing how to prompt multimodal systems effectively, interpret their outputs, and recognize their limitations matters.

Think about ethics before deployment. Before implementing multimodal AI, seriously consider privacy implications, bias risks, and potential negative consequences. Include diverse stakeholders in these conversations, especially people who might be most affected by the technology.

Support responsible development. Choose tools and services from companies that prioritize safety, transparency, and fairness. Consumer choices and public pressure can meaningfully influence how these powerful technologies evolve.

Final Thoughts: We’re Writing This Story Together

Multimodal AI represents one of the most significant technological shifts of our generation. The ability of systems to see, hear, read, and increasingly understand our world has moved from science fiction to everyday reality faster than most people expected.

The potential benefits are genuine and profound. Better healthcare diagnostics. More accessible education. Technology that works for people with disabilities. Creative tools that expand human imagination. Business solutions that actually solve real problems.

But the risks are equally real. Bias amplification. Privacy erosion. Environmental costs. Misinformation at scale. The potential for surveillance and control.

Here’s what I keep coming back to: this technology will change everything, but how it changes things depends entirely on choices we make right now. Choices about what we build. Choices about how we regulate it. Choices about who benefits from it. Choices about what values we encode into these systems.

We’re all part of this story—developers building these systems, policymakers writing the rules, businesses deploying them, educators teaching about them, and individuals using them daily. The models that see, hear, and read are already here. What they ultimately do with those abilities is something we’re deciding together, one choice at a time.

The future isn’t something that happens to us. It’s something we create. And right now, we’re creating a future where AI understands our world through multiple senses, processes information in ways that mirror our own experience, and becomes increasingly integrated into the fabric of daily life.

That future holds extraordinary promise. It also demands extraordinary responsibility. Let’s make sure we get this right.

Want to dive deeper into multimodal AI? Check out the latest research from Gartner on multimodal predictions, McKinsey’s comprehensive explainer, and the NIH’s multimodal AI initiatives for healthcare applications.

Subscribe to our newsletter for weekly insights on AI, technology, and how these tools are reshaping our world.

From Data to Decisions: Predictive Analytics for Business

October 31, 2025 @ 3:27 pm

[…] Related topic: The Rise of Multimodal AI […]